Debunking Myths

In short:

Adoption is broad and fast. OpenAI’s usage study shows gender parity and much faster growth in low-income countries; Anthropic reports U.S. workplace use roughly doubling in two years. (OpenAI, Anthropic)

Reliability has a concrete path forward. OpenAI’s 4–5 Sept paper argues that current training and evals reward guessing; aligning rewards with calibrated uncertainty and abstention should cut bluffing. (OpenAI)

Oversight is intensifying. The FTC opened an inquiry into “companion” chatbots; the U.S. Senate held a hearing after parental testimonies of harm. (FTC, US Senate)

Hello, and welcome back to the new school year. I wonder how many educators out there share my experience of encountering a vocal minority who still undersell AI, either by pretending it doesn’t exist or by listing its flaws and pointing to “Achilles’ heels” like the environmental costs of training models or societal risks like job displacement. These are of course valid concerns which are fair to surface, but they are also incomplete and dangerous because students, mass media, market participants, and the rest of society certainly are not waiting to adopt AI. If we want educators to make good decisions about AI, the least we need is up-to-date facts. Here are four September-fresh anecdotes—with links and explanations—for you to engage in positive discussions about AI.

1) Adoption is mainstream—and changing shape

As of 1 September, SimilarWeb ranks ChatGPT as the 5th most visited website in the world (up from 6th in August), placing it ahead of popular sites like X.com, Reddit, or Wikipedia; this popularity has been a sustained trend and it’s hard to argue that AI is just a fad.

OpenAI’s usage study released on 15 September analysed 1.5 million conversations and shows that early demographic gaps are closing: while users with typically feminine names only accounted for 37% of ChatGPT users in Jan 2024, the percentage had risen to 52% by July 2025. Growth in the lower-income countries also ran 4× the rate of the higher-income group, and this will no doubt increase as AI companies hand out subscriptions for free and launch affordable subscription tiers.

“Patterns of use can also be thought of in terms of Asking, Doing, and Expressing. About half of messages (49%) are “Asking,” a growing and highly rated category that shows people value ChatGPT most as an advisor rather than only for task completion. Doing (40% of usage, including about one third of use for work) encompasses task-oriented interactions such as drafting text, planning, or programming, where the model is enlisted to generate outputs or complete practical work. Expressing (11% of usage) captures uses that are neither asking nor doing, usually involving personal reflection, exploration, and play.” (OpenAI)

Anthropic’s September Economic Index paints a similar picture of rapid and widespread adoption: 40% of U.S. employees report using AI at work, up from 20% two years ago. This rate of growth is unprecedented:

“Historically, new technologies took decades to reach widespread adoption. Electricity took over 30 years to reach farm households after urban electrification. The first mass-market personal computer reached early adopters in 1981, but did not reach the majority of homes in the US for another 20 years. Even the rapidly-adopted internet took around five years to hit adoption rates that AI reached in just two years.” (Anthropic)

Why this matters. Like it or not, adoption is happening with or without school policies. If one truly believes that AI is a net negative to education or society, then it’s time to test and understand it intensively so we can spell out where we do and do not want students to use AI (I’m personally partial to separating assessments into 75% pure-human and 25% AI-augmented tracks). Schools must build staff AI literacy so choices are intentional, not incidental.

2) Hallucinations: we know how to fix them now

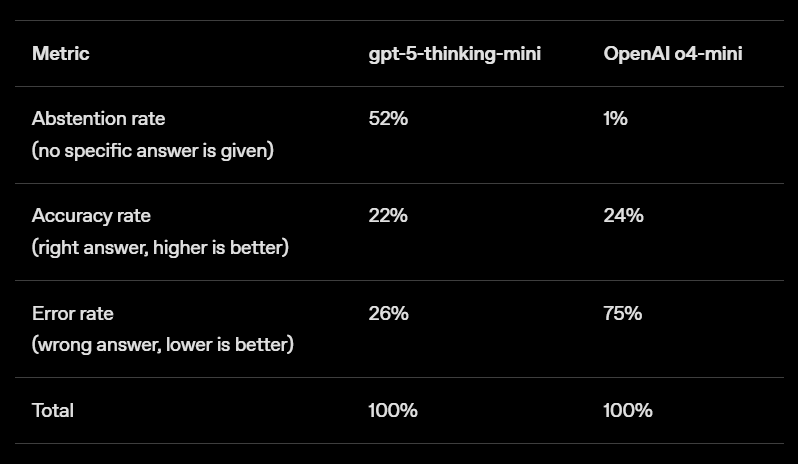

On 5 September, OpenAI published “Why Language Models Hallucinate.” The reason is actually quite simple:

“Hallucinations persist partly because current evaluation methods set the wrong incentives… Think about it like a multiple-choice test. If you do not know the answer but take a wild guess, you might get lucky and be right. Leaving it blank guarantees a zero. In the same way, when models are graded only on accuracy, the percentage of questions they get exactly right, they are encouraged to guess rather than say “I don’t know.”” (OpenAI)

The fix is also simple in principle: new training and evaluation methods will reward abstention, expressions of uncertainty, and seeking clarification. Doing so will turn reliability from a risk into a model capability. The latest models such as GPT-5 are already much less prone to hallucination than earlier models, and I wouldn’t be surprised if hallucination stopped being a problem a year from now (fingers crossed!).
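To make the multiple-choice analogy concrete, here is a minimal sketch of the arithmetic. The function names and the 1-point penalty are illustrative assumptions, not OpenAI's actual grading scheme; the point is only that guessing stops paying off once wrong answers cost something.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected score for answering when the model is right with probability p_correct.

    A correct answer earns 1 point and a wrong answer loses wrong_penalty points.
    Abstaining ("I don't know") always scores 0.
    """
    return p_correct * 1.0 - (1.0 - p_correct) * wrong_penalty


def best_action(p_correct: float, wrong_penalty: float) -> str:
    """Return 'guess' if answering beats the abstention score of 0, else 'abstain'."""
    return "guess" if expected_score(p_correct, wrong_penalty) > 0 else "abstain"


# A wild guess on a four-option question: 25% chance of being right.
p = 0.25

# Accuracy-only grading: wrong answers cost nothing, so guessing wins.
print(best_action(p, wrong_penalty=0.0))  # guess (expected score 0.25 vs 0)

# Hypothetical grading that deducts 1 point per wrong answer (an assumption).
print(best_action(p, wrong_penalty=1.0))  # abstain (expected score -0.5 vs 0)
```

Under the hypothetical 1-point penalty, answering only pays when the model is more than 50% sure, which is the kind of behaviour a calibrated "I don't know" is meant to encourage.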

Why this matters. Tools that cite sources by default, expose confidence, and ask clarifying questions before answering will unlock myriad new school-safe use cases. In truth, even if model progress were to stop today, society would still have decades of work ahead discovering how to make good use of this technology across every dimension. A student’s education is a decades-long effort, and it’s only fair that we plan for their future based on where technologies and societal trends are heading.

3) Safety moved from rhetoric to oversight

Regulators are reacting to real-world incidents, including tragic cases, and to rising concern about emotional dependency. On 11 September, the FTC issued compulsory orders to Alphabet, Meta, OpenAI, Snap, X.AI, and Character.ai asking how they measure and mitigate risks to children and teens. On 16 September, the U.S. Senate Subcommittee on Crime & Counterterrorism also held a hearing where parents and experts testified about harms attributed to chatbots.

It’s about time this happened, and these efforts will give rise to laws and frameworks that enable safer and more responsible application of AI technologies. OpenAI posted a special note on teen safety, freedom, and privacy, acknowledging problems within current laws and pledging to increase protection for under-18 users. Clear regulations and measures can help build trust and accountability, which should ultimately boost adoption.

Why this matters. Schools don’t get an opt-out. The real choice is engage and govern versus ignore and let usage proceed without guard-rails. Put AI on the risk register; enable data controls; define age-appropriate red lines; and teach students to disclose when they have used AI. This protects students and staff while adoption grows.

4) AI and the acceleration of progress

This one came out a bit earlier, but it’s too exciting and eye-catching not to share: George Church—the Harvard geneticist and pioneer behind many biotech breakthroughs such as gene sequencing and DNA synthesis—said in an interview he “wouldn’t be surprised” if ageing as a natural cause of death is solved around 2050. This is based on how AI is greatly compressing the time needed to discover new drugs and design new treatments, which means we can engineer billions of years’ worth of evolutionary progress “in one afternoon”. Industry is already moving AI-designed drugs into trials, and pharma is standing up shared AI platforms for discovery.

Why this matters. It’s not enough to debunk myths about AI; we also need to tell hopeful stories so people see that it can be used as a force for good. AI wins International Math Olympiad gold medals and Nobel Prizes, and it’s incredible that students can use essentially the same models to help themselves learn and work smarter. Given current trends of model progress, not using AI (at least a little bit) will soon make as much sense as a nearsighted person rejecting glasses because they don’t want to be reliant on them.

Bonus myth-watch: “95% of Gen-AI pilots fail?”

A widely shared MIT report claiming that virtually all GenAI experiments have zero return made headlines in August. I would take it with a pinch of salt, not least because the same lab is actually researching and promoting their own AI agents framework. Below, I let Ethan Mollick’s criticism of the paper speak for itself:

Try this in September

Start where you dislike the work. Pick one task you dread and let AI handle the grunt work while you keep the judgement.

Add this to your prompts: “If anything is unclear or missing, ask me clarifying questions before answering.” It nudges models towards the behaviour reliability research is now rewarding.
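For anyone who sends prompts through a script rather than a chat window, the same habit can be automated. This is a minimal sketch: the helper name `with_clarifying_instruction` and the sample prompt are hypothetical, and it only builds the prompt text without calling any AI service.

```python
CLARIFY_INSTRUCTION = (
    "If anything is unclear or missing, ask me clarifying questions before answering."
)


def with_clarifying_instruction(prompt: str) -> str:
    """Append the clarifying-question instruction unless it is already present."""
    text = prompt.strip()
    if CLARIFY_INSTRUCTION in text:
        return text
    return f"{text}\n\n{CLARIFY_INSTRUCTION}"


# Hypothetical example prompt; paste the result into whichever tool you use.
print(with_clarifying_instruction("Draft a Year 9 lesson plan on photosynthesis."))
```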