June '25 Update

In this edition:

AI Big Picture: Understanding Responsible Use of AI at a Government Level

AI in Action: Practical Example of AI for Debate Preparation

Find Out How Your Students Can Join A One-Week Residential AI Programme This Summer For Free!

Understanding Responsible Use of AI at a Government Level

In short:

Overlapping obligations. Child-safeguarding, data-protection and online-safety laws all touch AI, so compliance is never a single checklist but a constellation of duties.

Layered defence beats silver bullets. Absolute protection against harmful outputs is unrealistic; schools need tuned tools, human oversight and clear user rules working together.

Data stewardship is central. Keeping pupil and teacher content out of model-training loops, and being able to prove it, is now basic due diligence.

In last month's Good Future Foundation Community Meeting, we discussed what it takes to deploy AI in schools in a way that addresses key ethical risks and complies with legal requirements. It turns out this is non-trivial, and the way laws are currently written in the UK (and around the world) often leaves educators with more questions than answers. This month, we brave the legal quagmire to make sense of the confusing world of AI ethics and compliance in schools.

The S-A-F-E-T-Y checklist: UK laws in plain English

There are many laws covering different pillars of AI due diligence, so I asked ChatGPT to come up with a mnemonic for the relevant UK laws. The Department for Education is developing a framework to guide schools and tool developers, and I hope it will streamline some of these requirements.

Analysis

The requirement to block all harmful AI output is impossible to meet. AI hallucinations are becoming less frequent, but they will remain a problem for the foreseeable future, so it’s better to think in terms of layers of defence rather than absolute safety. For example, unlike general-purpose chatbots such as ChatGPT or Gemini, an education-specific chatbot can be instructed to avoid age-inappropriate topics; parent or teacher oversight during AI use adds another layer that keeps risk as low as reasonably practicable.

Keep user data out of the training loop. Conversations with an AI can contain a lot of private information, so user inputs should not feed future model training. Excluding inputs from training is often limited to paid plans or requires an opt-out, which means action from both IT staff and individual users.

Start-up risk is real. Many AI EdTech suppliers are new, and competition is fierce. What happens to your school’s data if a company goes under? The recent controversy surrounding DNA data stored by 23andMe is a reminder to check that we can export our data for archiving and delete it from the platform.

Three questions to ask before onboarding an AI tool

Will prompts train future models? If the answer is “no”, where is that promise written?

Do staff, pupils and parents understand what they can and cannot do, and how their privacy is protected? Who do they contact when things go wrong?

Is all the evidence in one folder? The Data Protection Impact Assessment (DPIA), evidence of filter testing and the complaints flowchart should be ready for inspectors.

Elsewhere in the world

Researchers are discovering new AI capabilities almost weekly, so lawmakers struggle to craft laws that are both specific and enduring.

In short, no matter where you are, AI due diligence for schools is mostly a patchwork of requirements aimed at transparency and human oversight, plus lots and lots of paperwork. Perfection can wait; documentation can’t. Keep the paper trail alive and you’ll stay ahead of the next upgrade.

Practical Example of AI for Debate Preparation

In short:

Analog first, AI second. A quick chalkboard mind-map grounds definitions and strategy before any screen lights up.

Tech as an accelerator, not a crutch. AI speeds the process while keeping performance and real-time thinking squarely in students’ hands.

Faced with the motion “Is learning English still relevant in Hong Kong today?”, a secondary school debate team skipped the usual scattershot Googling and trialled a lean AI workflow. One brisk session (analog brainstorm, targeted deep research, auto-outlining and text-to-speech rehearsal) produced draft speeches for every speaker. By shifting the grunt work to machines, students could spend their energy on strategy, rebuttals, and stagecraft.

How It Worked

Activity: The team huddled around a chalkboard, unpacking definitions, burdens and stakeholders. Phones stayed in pockets; a quick photo of the board became the plan.

Prompt: None (no AI at this stage).

Activity: Pairs spent 15 minutes with Perplexity Research Mode and Gemini Deep Research to surface statistics, expert quotes, and counter-examples. Each pair shared 1-2 key findings with the team.

Prompt: “You are a research assistant for a school debate. Motion: Is learning English still relevant in Hong Kong today? 1. List the three strongest claims for and against (≤40 words each). 2. Provide three credible sources per claim (title, year, link). 3. Flag key rebuttals. Return as a table.”

Activity: A voice memo captured a 20-minute strategy discussion, which was transcribed and then organised into a clear outline setting out who would cover which points, with which evidence, and what questions they could raise.

Prompt: “Turn this transcript into a concise outline: headings = argument, bullets = supporting evidence, note any action items.”

Activity: Each speaker combined the outline and evidence into a four-minute script in ChatGPT. They then read it aloud to themselves and edited it (with AI or by hand) until it was easy and smooth to deliver.

Prompt: “Draft a persuasive debate speech using our outline. Clear signposting, conversational tone, memorable closing line. Keep it under four minutes.”

Follow-up prompt: “Edit this speech to use simpler language. I prefer shorter sentences and repetition of these keywords…”

Activity: I demoed OpenAI.fm to show how text-to-speech playback can highlight pacing, stress, and pronunciation; students later used it for individual practice.

Prompt: None (tool only).

Impact

“We went from blank page to a first-draft speech in one meeting. That never happens.”

“Because an AI note-taker had our backs, we could focus on testing arguments instead of scribbling minutes.”

As a coach, I loved that the usual “Who’s got evidence for…?” scramble was much diminished: AI-generated evidence tables were instantly available and made students feel more secure and better equipped to speak up. Freed from procedural clutter such as formatting and diarising, the group spent its energy poking holes in each other’s logic and rehearsing delivery. Rapid progress raised engagement: students left buzzing, eager to refine rather than start over.

Takeaways

Keep performance as the anchor. Requiring students to stand up and speak ensures AI remains a helper, not a crutch.

Use AI to deepen interpersonal focus. Automated transcription lets teams converse freely and revisit ideas later.

Leverage TTS for flexible listening-and-speaking practice. Playback turns any script into a personalised pronunciation coach, which worked a treat for ESL students.

Next up

How high schoolers used AI to prepare for university interviews when they knew nothing about how universities work.

Get Ready for the Festival of Education!

We have an exciting lineup of sessions and speakers at our booth for this year’s Festival of Education at Wellington College on 3-4 July 2025! Visit our stand to share your aspirations for the future of education and enter for a chance to win valuable education resources.

Join our Community Breakfast on Friday morning to connect with fellow educators and learn about our growing network.

We’re also launching our Online Community platform and AI Sandboxing Tool at the Festival! Stop by for a tour, get your invite link, and discover safe and effective ways to use AI with other teachers.

We look forward to seeing you there!

Find Out How Your Students Can Join A One-Week Residential AI Programme This Summer For Free!

In partnership with The National Mathematics and Science College in Warwickshire, we’re launching our first AI-focussed summer programme! From 20-27 July 2025, we’ll bring together 30 passionate students aged 14-17 for an immersive week exploring AI and its real-world impact.

This is a fully funded residential experience for young learners to enjoy hands-on AI workshops and collaborative projects, visits to Bletchley Park and some of the top tech companies, and an opportunity to explore ethical AI use in a supportive and welcoming environment. The programme offers a great mix of theoretical knowledge and practical application.

We are recruiting both students and teachers to join this programme. You can find more details here:

Don’t miss out on the chance to join this fun and exciting experimental initiative!

Deepening Our Impact with the AI Quality Mark

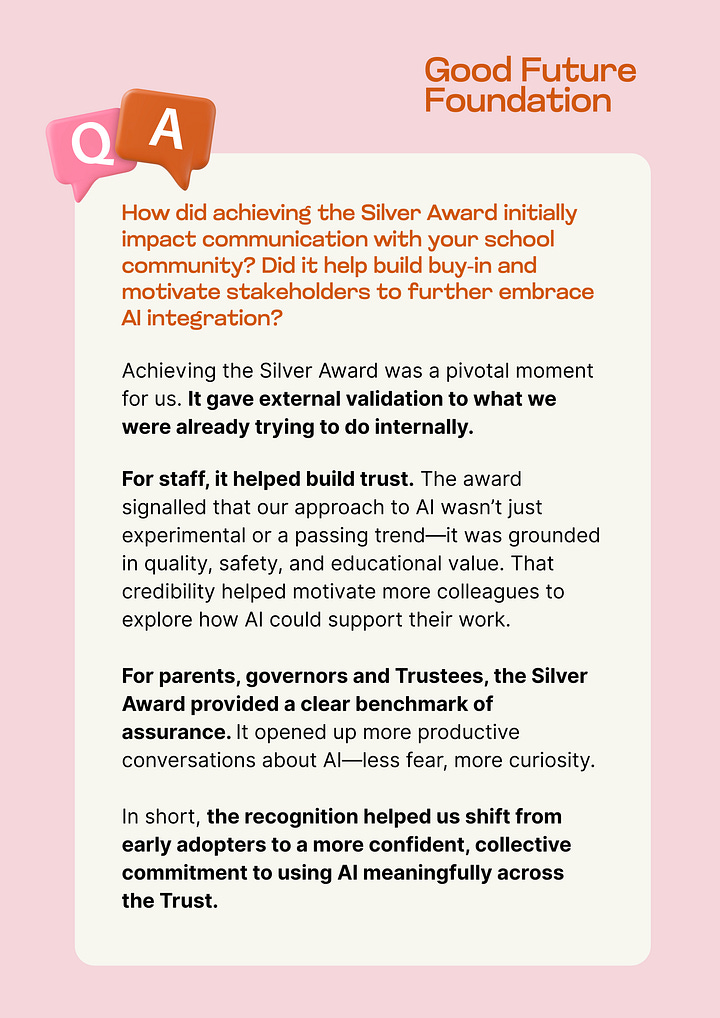

This is a milestone moment for our AI Quality Mark: we have awarded our first-ever Gold standard to two pioneering school groups. The Woodland Academy Trust, comprising five primary schools, has progressed from being our first Silver recipient last October to achieving Gold status. Joining them at Gold is The RGS Worcester Family of Schools, a group of four schools. We’re delighted that both organisations are ready to share their journeys and experiences to help schools that are just beginning theirs.

Julie Carson, Director of Education at Woodland Academy Trust, reflects on their journey:

The momentum continues to build, with 10 more multi-academy trusts and schools achieving Silver, Bronze and Progress awards since our last update.

To further strengthen our support framework, we’re proud to announce our partnership with MerrickEd consultancy. Victoria Merrick and her team bring extensive school leadership and research experience to help schools embed responsible AI use in measurable and sustainable ways. This collaboration comes at a crucial time as we scale rapidly, with over 30 schools enquiring about and engaging with the framework each week.

The AI Quality Mark remains free for all schools to enrol on. Every school receives tailored support, guided self-assessment, and a pathway towards developing an AI strategy that's right for them. If your school is ready to lead with integrity and purpose when it comes to AI, we invite you to take part in this initiative by registering your interest below.

Tailoring AI Professional Development: Meeting Schools Where They Are

As more schools prioritise AI literacy across their entire staff body, we’re also expanding our professional development offerings. Our tailored sessions support both teaching and non-teaching staff in understanding and implementing AI safely and responsibly in schools.

Free AI Professional Development Days

Trust/School-Specific AI Workshops

10 June - Christopher Nieper Trust

17 June - London Academy of Excellence Stratford

20 June - Wandle Learning Trust

26 June - Turner Schools

Looking ahead, some trusts have already secured their professional development support for the 2025-26 academic year. To arrange a tailored session for your school or trust, contact our team to discuss your specific needs and preferred dates.

Insightful New Podcast Episodes to Check Out!

In our three latest episodes, we’ve had the pleasure of inviting experts from various fields to share how AI is affecting education from the perspective of their own professions. Dr. Lulu Shi offers a sociological perspective that challenges the idea that technological progress is predetermined. Bukky Yusuf discusses the ethical considerations surrounding AI adoption. Thomas Sparrow shares the challenges posed by AI-driven disinformation. These conversations are so informative and thought-provoking that you won’t want to miss them. Listen to these episodes through the link below.

We at Merrick-Ed are delighted to be working with you!