Nov '25 Update from Good Future Foundation

Alongside our organisation updates, our November issue features the reflections of an educator in Dubai who used a custom Copilot agent to enhance her lesson design, and how school assessment is evolving as it becomes increasingly clear that AI detection in homework simply doesn’t work.

Browse the summary sections below and click on whatever catches your interest to learn more!

A Week of Collaborative Learning with Ghanaian Educators

In mid-October, our team travelled to Ghana to continue our work with International Community School Ghana, which is led by the inspirational Dr Charles Yeboah. Thomas Sparrow from Deutsche Welle and Laura Knight of Sapio Ltd, both members of the Good Future Foundation advisory council, joined me for this first major project together as a unit. We arrived in Accra on 18th October and spent the evening planning the programme ahead. The purpose of the trip was simple but ambitious: to learn alongside Ghanaian educators, share what we have been researching, and work together across three cities: Tamale, Kumasi and Accra.

We began in Tamale on Monday 20 October, hosted by Savannah International Academy, an independent boarding school in the north of the country. Alongside colleagues from ICS Ghana, we delivered sessions on AI literacy, AI and safeguarding, AI and disinformation and AI for teacher productivity. The Ghanaian team played a crucial role in localising the content so that it would remain useful and sustainable in schools with very different levels of access to electricity, connectivity and hardware. The questions from educators were practical and honest, including how to support students who are already using AI on their phones when schools themselves do not yet have a computer lab or a reliable internet connection.

From there we flew to Kumasi, where over 300 teachers had registered for what became our largest event of the week. We were welcomed at the ICS Kumasi campus by Pilgrim, a teacher who had composed and recorded an original song about the Good Future Foundation and performed it live with a drummer and pianist as attendees arrived, setting a joyful tone for the day. The programme built on the Tamale sessions, with the Kumasi team speaking powerfully about AI and safeguarding through a Ghanaian lens and helping us address questions about implementation in challenging conditions. Again, the focus was on how to create space for dialogue with students about responsible AI use in their everyday lives, rather than on subscriptions and access to expensive tech.

Our final day of training took place back in Accra on Friday 24 October, hosted by ICS Accra. The themes mirrored those we had explored earlier in the week, with workshops and seminars on AI literacy, safeguarding, disinformation and teacher productivity, all anchored in responsible use and best practice. During the day, Joy News, a national broadcaster in Ghana, visited to film and interview me and Dr Yeboah, giving wider visibility to the work and to the leadership role ICS Ghana is taking in this space. Throughout, the ICS teams in Tamale, Kumasi and Accra were outstanding collaborators and made sure the work was rooted in the realities of their schools and communities.

We flew home on the evening of 24 October and landed back in the UK on 25 October with a great deal to reflect on. At every stage this project was about collaboration; the educators we worked with in Ghana shaped the content as much as we did, and the strength of the programme came directly from that shared ownership.

Huge thank you to Laura Knight, Mathias Lawluvi, Potence Tetteh, Ernestina N. Addo, Thomas Sparrow, Enoch Oye, Emmanuel Awuah Pilgrim, Daniel Benson Sarfo, Dr Charles Yeboah and the ACS and ICS Ghana teams for their continued involvement and contributions, and to all who attended.

Our hope now is to build on these relationships and continue developing a community of practice that stretches well beyond a single week in October. We look forward to keeping you posted with future developments!

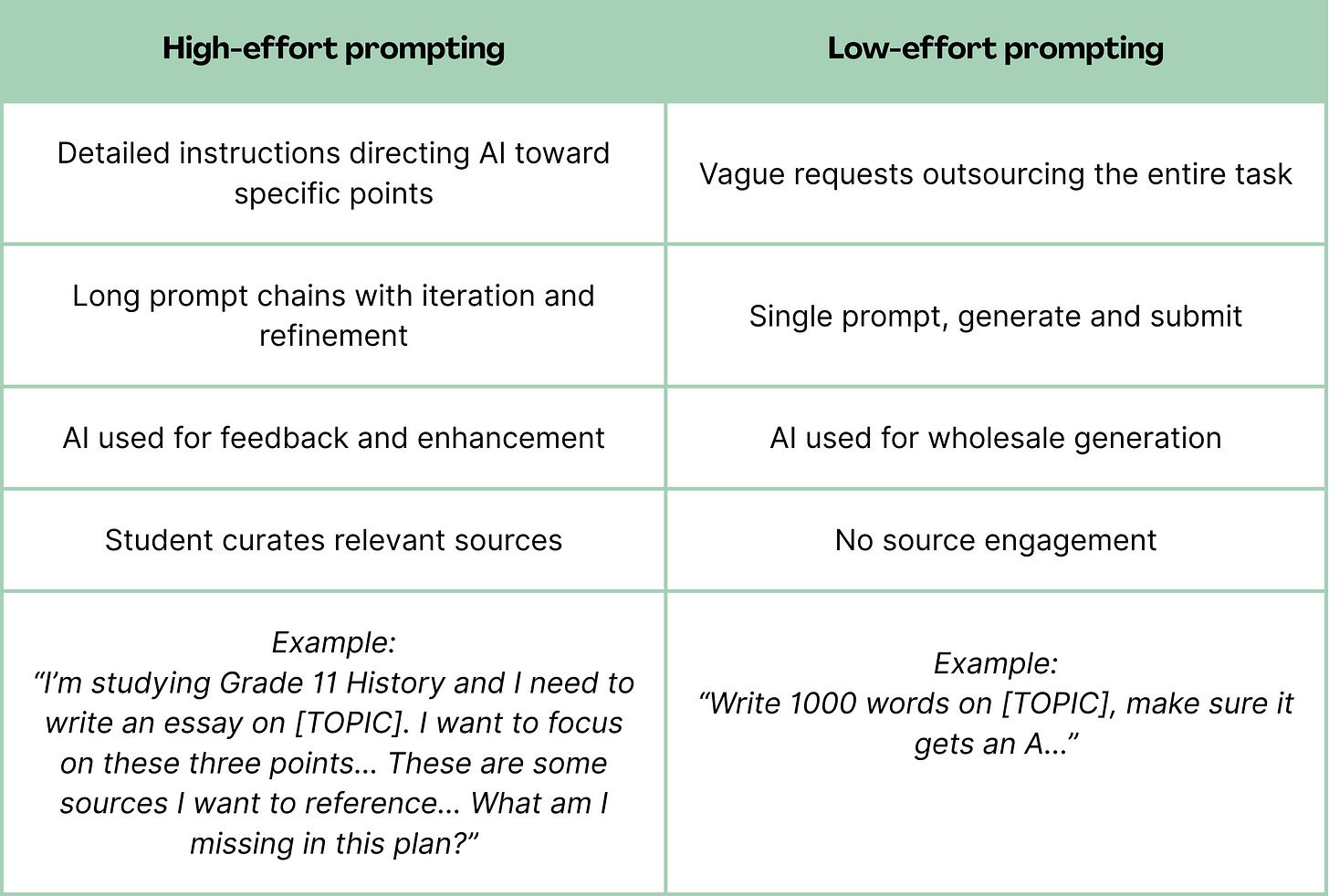

Rethinking Assessment When Detection Fails

In Short:

Andrej Karpathy recently told a school board that AI detection in homework is fundamentally impossible—and initiatives like Google’s SynthID watermarking are still a long way from being reliable.

Short-term reality: In-person assessment—exams, presentations, interviews—remains the most reliable proof of skill.

Long-term direction: Move from single-artifact to multi-artifact assessment. Collect drafts, prompts, and reflections alongside final work to make student thinking visible.

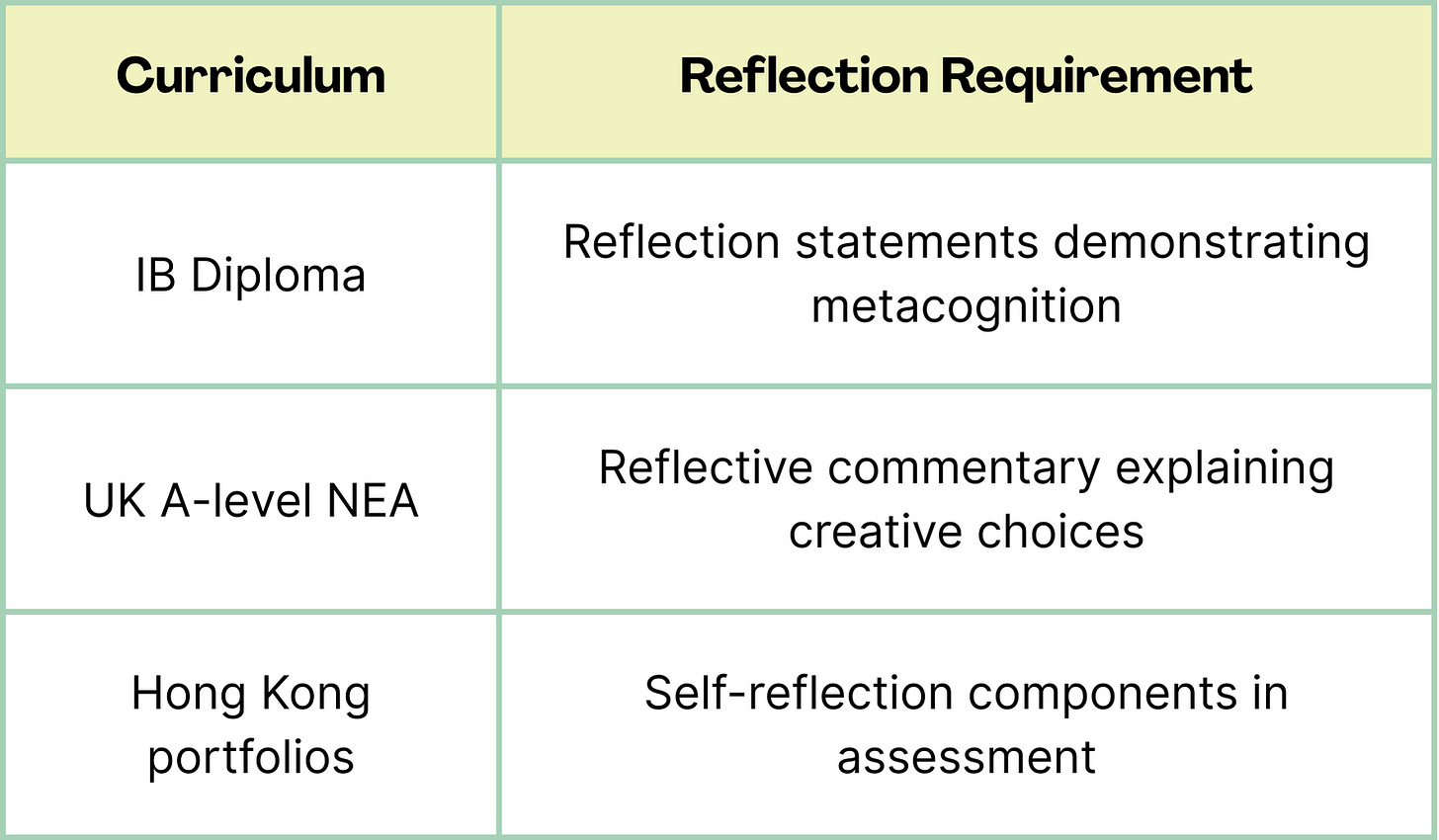

The building blocks exist: IB reflection statements, UK A-level NEA commentaries, and portfolio assessment in Hong Kong all point the way forward.

Andrej Karpathy—OpenAI co-founder and former AI director at Tesla—recently spoke at a Seattle school board meeting about AI in education. His message was direct: you will never reliably detect AI use in homework. AI detectors don’t work, can be defeated, and are fundamentally doomed to fail. For educators still hoping better detection tools will solve this problem, that’s a sobering verdict from someone who understands these systems deeply.

What about watermarking? Google’s SynthID embeds invisible statistical patterns into AI-generated content that survive light editing, and they open-sourced the technology in late 2024. But watermarking only works if every AI conforms to the same standard. Students can paraphrase, run local models, or use non-compliant tools. Detection, in any form, remains a losing game.

The short term: In-person assessment

The most reliable proof of skill is assessment where teachers directly observe student thinking:

Sit-down exams — controlled, unassisted writing

Presentations — students explain and defend their work

Interviews — teachers probe understanding through dialogue

Karpathy recommends shifting the majority of grading to in-class work. Schools should communicate clearly: unassisted assessments will remain core. Students facing school-leaving exams—A-levels, IB, GCSE—already know they need to perform independently when it counts.

The long term: From single artifact to multi-artifact

How do we assess take-home work in ways that capture genuine learning? Let’s focus on essay-based assessment, which is arguably the most threatened by AI-generated text.

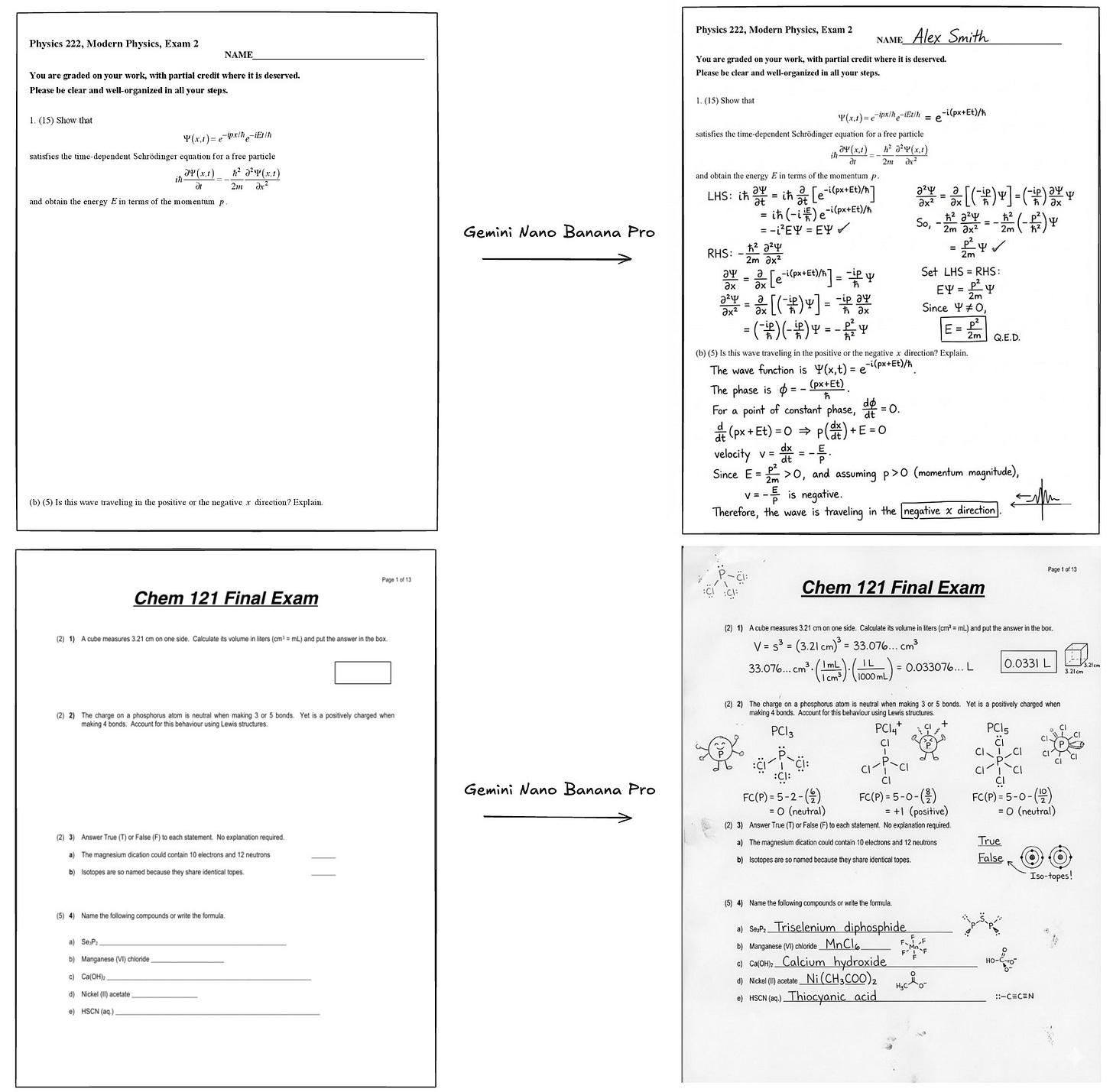

Educators have been thinking about this for some time. In 2023, Ethan Mollick and Lilach Mollick at Wharton published research on incorporating prompts into assessment. Existing curricula already embed these principles: IB reflection statements, UK A-level NEA commentaries, and portfolio assessment in Hong Kong.

In an ideal world, a practical model for AI-assisted take-home essays might collect:

Final essay

Earlier drafts showing progression

Prompt history documenting AI interactions

Short reflection on what changed and why (this document can also serve as an AI usage declaration)

Practically, not every assignment needs all four; a final essay with prompts may suffice, although a reflection statement that includes an AI usage declaration is good practice that deserves the extra effort to institutionalise. The point is shifting from evaluating output to evaluating thinking.
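For schools that collect submissions digitally, the four-part model above could be tracked with a very small record. The following is only a sketch under stated assumptions: the class name `EssaySubmission`, its field names, and the `missing_artifacts` helper are hypothetical and do not describe any existing school system.

```python
from dataclasses import dataclass, field


@dataclass
class EssaySubmission:
    """Hypothetical record of one take-home essay and its supporting artifacts."""
    final_essay: str
    drafts: list[str] = field(default_factory=list)          # earlier drafts, oldest first
    prompt_history: list[str] = field(default_factory=list)  # prompts the student sent to an AI tool
    reflection: str = ""                                     # what changed and why; doubles as AI usage declaration

    def missing_artifacts(self) -> list[str]:
        """Return the names of the artifacts that are empty or absent."""
        checks = {
            "final essay": bool(self.final_essay.strip()),
            "earlier drafts": len(self.drafts) > 0,
            "prompt history": len(self.prompt_history) > 0,
            "reflection": bool(self.reflection.strip()),
        }
        return [name for name, present in checks.items() if not present]


# Example: a student hands in the essay and prompts, but no drafts or reflection.
submission = EssaySubmission(
    final_essay="Final text of the essay...",
    prompt_history=["Suggest counter-arguments to my thesis."],
)
print(submission.missing_artifacts())
```

Because not every assignment needs all four artifacts, a teacher would treat the returned list as a prompt for follow-up rather than as an automatic penalty.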

One frustration worth naming: exam boards like the IBO published initial AI guidance but have not followed up with implementation frameworks. That silence has been disappointing. Schools are largely figuring this out on their own.

What does “good” look like?

If we’re asking students to submit prompts, what distinguishes thoughtful from superficial? The difference is more visible than you might expect.

In my own practice at the Hong Kong Academy for Gifted Education, we have used student prompts to analyse learning. The difference between high-effort and low-effort prompting is immediately visible—it takes very little training for teachers to spot it.

Getting started

Would increasing the number of artifacts collected increase teacher workload? I don’t think so, because teachers will increasingly use AI to assist with assessment. As many teachers have already found out, attaching a clear rubric, assessment descriptions, and course outlines is enough for an AI to give high-quality feedback and assessment on a variety of subjects.

The real shift is from marking final outputs to reviewing prompts and reflections—evaluating thinking, not just product.

Pilot first: Try multi-artifact submission with older students, for one assignment, before scaling

Use school-sanctioned tools: They provide a stronger audit trail than personal ChatGPT exports

Start with higher forms: Students facing school-leaving exams already have incentive to develop independent skill

Using AI to Plan, Teach, and Reflect - Custom Copilot Agent Transforms Lesson Design at GEMS Winchester School, Dubai

In Short:

Teachers use a custom Copilot agent to design lessons aligned with cognitive science

Using AI to strengthen instructional precision, reduce admin burden, and embed evidence-based teaching techniques

Apply Willingham’s memory model, Rosenshine’s Principles of Instruction, retrieval practice, and cognitive science systematically

Reflect on how AI amplifies teacher expertise without replacing pedagogical judgment

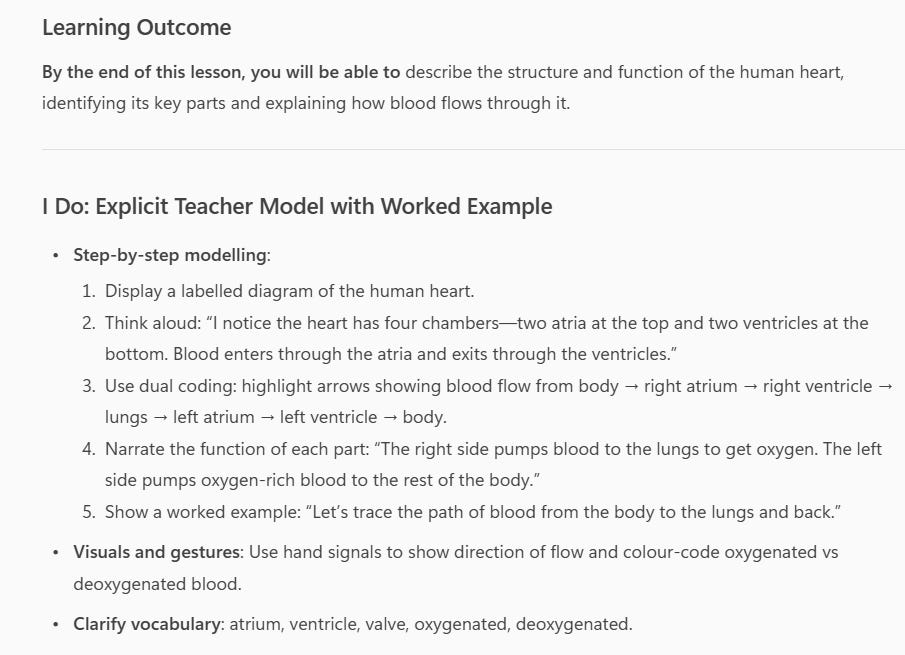

At GEMS Winchester School, we are innovating how lesson planning looks in an AI age. Our teachers have been using a custom Copilot agent, a purpose-built tool that transforms how educators design instruction and embed the science of learning into daily practice. This AI agent is structured around Daniel Willingham’s cognitive principles and Doug Lemov’s proven classroom techniques, which our school has adapted into our planning and delivery model. With intelligent prompting, teachers turn every planning session into an opportunity to develop essential instructional skills: precision, consistency, differentiation, and reflection. The agent guides teachers through every stage of lesson design, helping them move from “filling in a template” to “thinking it through pedagogically.” In this way, teachers use AI to deepen instructional quality and practice deliberate planning.

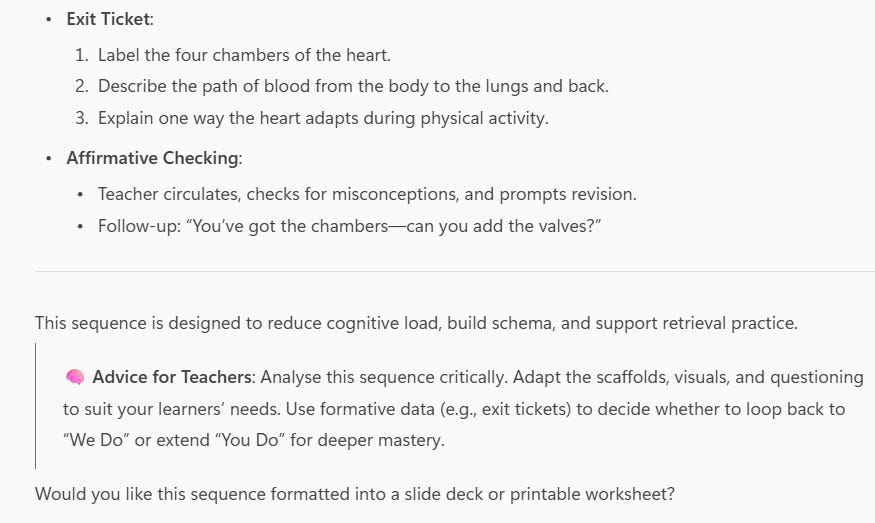

From Theory to Practice: How It Looks in the Classroom

Teachers explored the AI agent across several planning scenarios:

Curriculum-Aligned Outcomes: Teachers input the year group and topic (e.g., “Year 6 Circulatory system”) and receive pre-generated learning outcomes, which focus on the procedural knowledge or skills to be gained, and “To Know” essentials, the factual or conceptual knowledge components aligned with our curriculum standards.

Technique Integration: The agent suggests specific Lemov techniques at strategic points (e.g., “Use Cold Call after explaining mitochondria function to ensure all students are processing the concept”).

Cognitive Load Management: Lessons are automatically structured to avoid working memory overload, with content chunked appropriately and retrieval practice embedded at intervals.

Generating Feedback Comments: The Copilot agent creates feedback comments that help students correct and improve their responses, and suggests a follow-up challenge question.

Each activity reinforced the same core idea: better planning leads to better teaching, and better teaching leads to better learning outcomes. Teachers use the P.R.O.M.P.T. framework (Precise, Role-Based, Outcome-Oriented, Medium-Specific, Provide Context, Test & Refine) to query the custom Copilot agent. A pre-designed prompt bank has been developed and integrated with the agent to support teachers in using it.
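To illustrate how a prompt written with the P.R.O.M.P.T. framework might be assembled, here is a minimal sketch. It is an assumption-laden illustration, not the school's actual prompt bank: the `PromptRequest` class, its field names, the mapping of each field to a letter of the framework, and the example wording are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PromptRequest:
    """Hypothetical container for the parts of a P.R.O.M.P.T.-style prompt."""
    task: str     # Precise: the specific thing being asked for
    role: str     # Role-Based: the persona the agent should adopt
    outcome: str  # Outcome-Oriented: what a good result should achieve
    medium: str   # Medium-Specific: the format of the output
    context: str  # Provide Context: class, curriculum, constraints

    def render(self) -> str:
        """Combine the parts into a single prompt, one part per line."""
        lines = [
            f"Act as {self.role}.",
            f"Task: {self.task}",
            f"Intended outcome: {self.outcome}",
            f"Output format: {self.medium}",
            f"Context: {self.context}",
        ]
        return "\n".join(lines)


request = PromptRequest(
    task="Plan a lesson on the circulatory system",
    role="an instructional coach",
    outcome="Students can explain how blood moves around the body",
    medium="A lesson outline with retrieval practice at intervals",
    context="Year 6 science class",
)
prompt = request.render()
print(prompt)
```

The final step of the framework, Test & Refine, is not code: the teacher reads the agent's response, adjusts one or more fields, and renders the prompt again.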

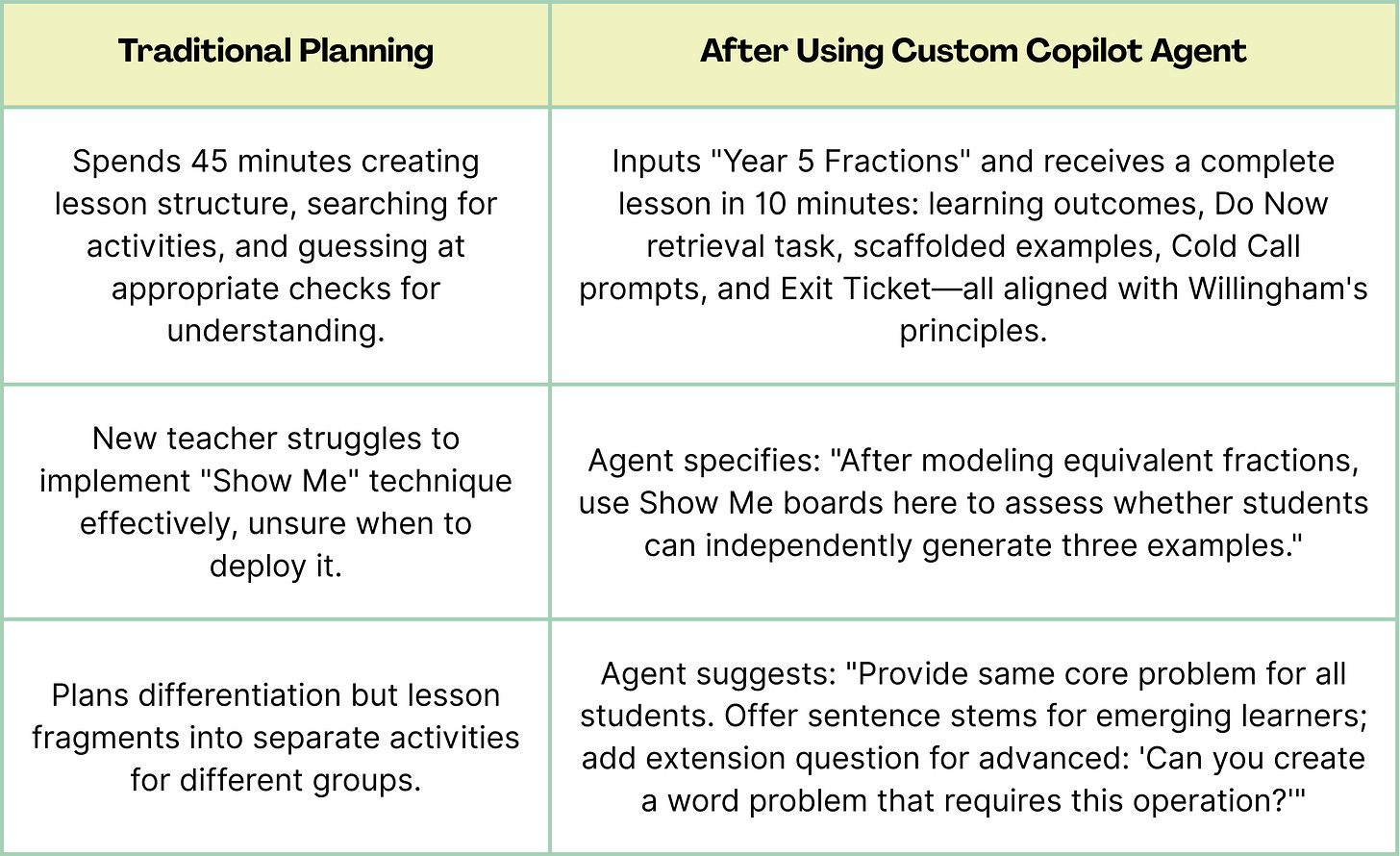

Real Teacher Scenarios: Before & After Using the Agent

After using the agent, teachers quickly noticed how AI-embedded cognitive science transformed their instruction from generic to genuinely impactful.

Metacognition Moments

Reflecting after implementation, teachers shared insights about their planning process, what we call Metacognition Moments:

“I realized my original lessons weren’t strategically checking for understanding. The agent showed me where to pause and assess, not just at the end.”

“When I use the Copilot agent, my lessons feel impactful. It’s like having an instructional coach reminding me why each component matters.”

“Seeing Lemov techniques embedded in context helped me understand when to use them, not just what they are.”

These reflections align beautifully with WSD’s commitment to evidence-based teaching, purposeful AI integration for learning & teaching and embedding the science behind instruction into every planning session.

Why a Custom Copilot Agent Matters

Emerging research tells us that AI integration in education is not just a nice-to-have; it’s transformative when purpose-built. Most generic AI tools lack pedagogical grounding, but custom agents trained on specific instructional models can systematically apply what we know works. Our agent embeds:

Willingham’s cognitive principles: Lessons are designed to activate prior knowledge, manage working memory, and promote long-term retention through spaced retrieval.

Lemov’s techniques: Strategic deployment of Cold Call, Check for Understanding, Ratio, and other high-leverage moves at optimal moments.

School-specific adaptations: Our refined approaches to adaptive teaching, assessment, and curriculum sequencing.

In our whole-school instructional program, we emphasize the cycle of plan → teach → reflect → refine. Using AI in planning mirrors that cycle: teachers review agent output, personalize for their students, teach the lesson, and reflect on effectiveness. So, the Copilot agent becomes an extension of professional development, not a separate add-on.

The Benefits: Time, Precision, and Growth

Time Savings: What once took 45 minutes now takes 10. Teachers review, adjust, and personalize rather than building from scratch.

Instructional Consistency: Every teacher, regardless of experience, plans lessons rooted in cognitive science and proven techniques.

Reduced Cognitive Load for Teachers: With planning streamlined, teachers have bandwidth for responsive teaching, the real-time adjustments that no AI can predict.

Continuous Professional Learning: Teachers see exemplar planning daily, internalizing the science of learning through repeated exposure.

Focus on the Art of Teaching: By handling structural rigor, the agent frees teachers to focus on relationships, culture-building, and in-the-moment pedagogical decisions.

Looking Ahead

Going forward, teachers will extend their use of the Copilot agent across subjects from science to humanities to languages, applying evidence-based strategies in every lesson they design. At GEMS Winchester School, purposeful and pedagogically grounded AI drives our approach to innovation. We are guiding teachers to plan with intention, integrating AI carefully and strategically into the heart of meaningful instruction. Leaders ensure AI enhances teaching with purpose, precision, and reflection; never replacing teacher expertise, always amplifying it.

References

Willingham, D. T. (2009). Why Don’t Students Like School? A Cognitive Scientist Answers Questions About How the Mind Works and What It Means for the Classroom. Jossey-Bass.

Lemov, D. (2015). Teach Like a Champion 2.0: 62 Techniques That Put Students on the Path to College. Jossey-Bass.

Zawacki-Richter, O., & Anderson, T. (2025). AI Integration in Educational Practice: Implications for Teacher Planning. Educational Technology Review, SpringerOpen.

12 Schools and MATs Awarded their AI Quality Mark in November

What an incredible month to celebrate another 12 organisations, representing 18 schools, obtaining the AI Quality Mark awards! They are:

Gold Award: Haileybury College, Northlands School

Silver Award: Aylesbury High School

Bronze Award: Rushall Primary School, Minerva’s Virtual Academy, The Diocese of Chelmsford Vine Schools Trust

Progress Award: Canvey Junior School, Crossflatts Primary School, Dudley Academies Trust, Purford Green Primary School, Maltby Academy, and St Bartholomew’s Primary Academy

We deeply appreciate all these schools and multi-academy trusts for taking the time to review their current AI initiatives using our framework and completing their self-assessments. We also want to extend our heartfelt gratitude to our assessors, mostly educators and senior school leaders, who dedicate their expertise to reviewing submissions and providing genuine feedback and practical guidance to help these schools take meaningful next steps towards safe and effective AI integration.

Bi-monthly Teachers Community Meeting

Every two months, we host a one-hour online session to allow teachers who are navigating AI to come together to share best practices. The upcoming session will be at 4-5pm on 17th December (Wednesday). We have invited two schools who have recently achieved the Gold Award to share what they are doing in terms of supporting teachers and students on responsible AI use.

Lex Lang from Caterham Prep School will share how they develop their AI policy and strategy with their whole-school approach. Tom Wade from Haileybury College will talk about the support and guidance they provide their high school students and how they integrate AI into their curriculum. We will also set aside time for teachers joining the meeting to raise questions and hear other teachers’ experiences.

Please sign up here to receive a calendar invite and meeting link.

Free Bite-sized AI CPD Drops on Responsible AI Teaching

Our five-part series of AI CPD Drops on Responsible AI Teaching, created in partnership with STEM Learning, is now fully available and free to access on both the STEM Learning and Good Future Foundation community platforms! These resources have been developed with input from secondary and primary teacher focus groups to address the real challenges faced in the classroom.

The first three sessions focus on discussing responsible digital citizenship, introducing age-appropriate content, and explaining AI to primary students. The final two sessions explore how to prepare secondary school students for AI use in unsupervised environments and help them prepare for a changing world of work shaped by AI.

These bite-sized CPD sessions are designed to fit into your schedule. Listen while commuting, walking dogs, doing household chores, or whenever you have a spare moment. After listening, we’d love to hear your thoughts on how you might implement these approaches in your teaching. And don’t forget to complete this evaluation to receive your digital badge and certificate.

New Episodes on Foundational Impact Podcast

Two more fascinating episodes on this month’s Foundational Impact!

We’ve invited Muireann Hendriksen, Principal Research Scientist at Pearson, to share the insights from her team’s recent research study “Asking to Learn”, where they analysed 128,000 AI queries from 9,000 student users to understand how AI might scaffold students toward higher-order thinking and deeper understanding.

In another episode, we had the pleasure of speaking with the founder of Stick’Em, the winning team who just claimed the $1 million from this year’s Hult Prize, the world’s largest student startup competition. We interviewed their founder, Adam, both before and after he won the award. Tune in to hear why making STEAM education accessible is crucial for preparing children to thrive in an AI-infused world, and learn how he plans to leverage AI to scale his social enterprise following this achievement.

You can now watch the highlights from these conversations on YouTube, and listen to the complete episodes across all major podcast platforms.