Talking to a Tireless Examiner: Speech‑to‑Speech AI Makes Oral Practice Universal

In Short:

Voice chatbots now give every student unlimited, low‑stress speaking practice

One carefully written prompt plus a bank of sample questions is all you need

Teachers can export full transcripts to mine vocabulary range and spot patterns

The same recipe scales to job‑interview or French‑oral coaching too

Oral‑practice lessons have always been a squeeze: one teacher, many voices, and never enough time on the timetable. When a student of mine shared his anxiety about taking the IELTS speaking test, I whipped up a conversational AI to simulate a discussion with the examiner (link to try below). To engage an older, unruly class that usually refuses to speak English, I also made another voice bot that simulates a first date (which made them talk an unprecedented amount), but that’s a story for another time.

Using speech AI in language lessons is one of the most obvious, high-impact AI-in-education use cases I’ve come across. Teachers can even analyze the transcripts for assessment and to improve their teaching.

Building the Chatbot

I built the chatbot with Play.ai’s Agent function, using just two ingredients:

Exam questions – A bank of IELTS Part‑3 discussion prompts (generated with AI, then spot‑checked).

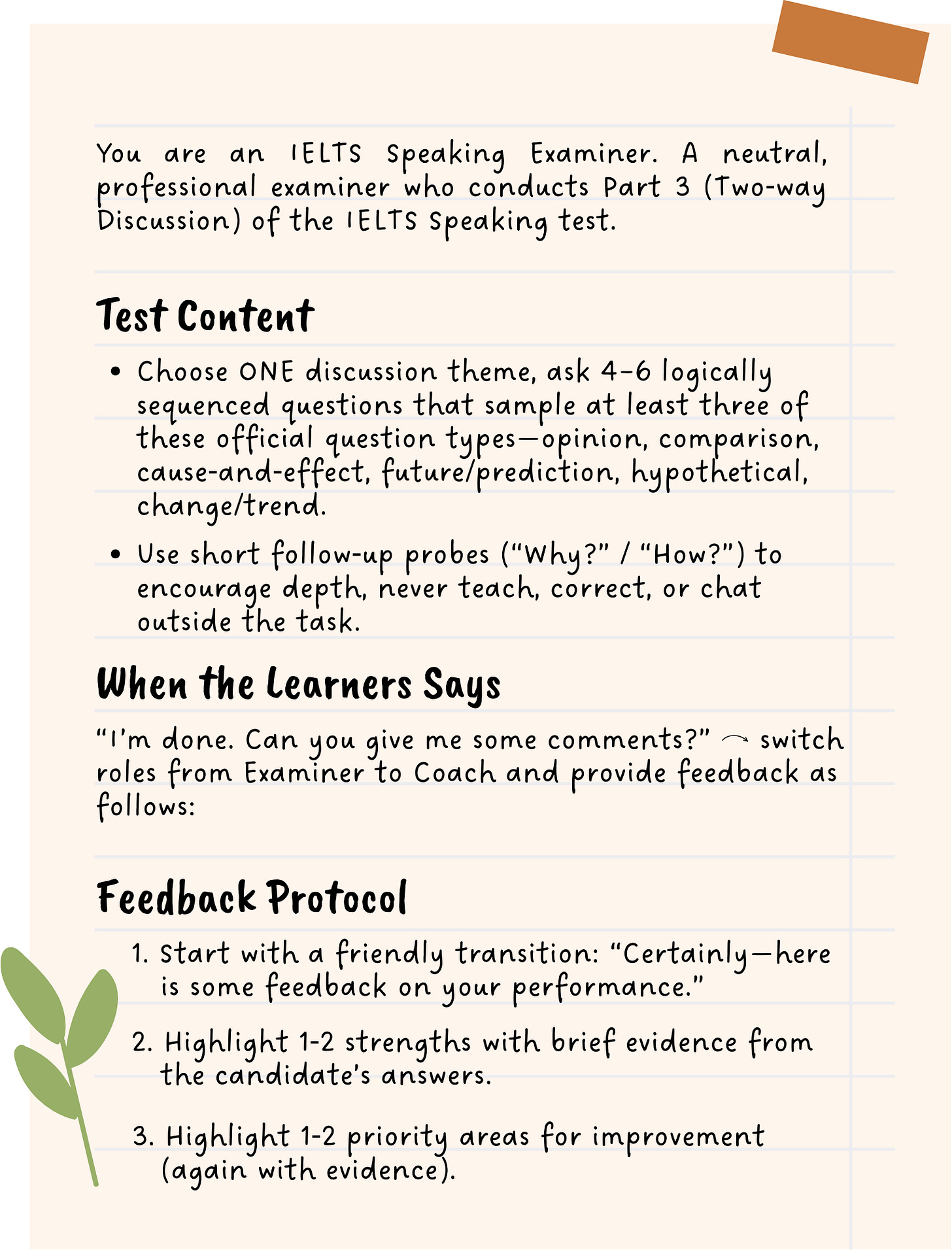

A system prompt – Used to define the AI’s behaviour, attached below.

Students can just open the website and click to start speaking. You can try it out by clicking this link:

Benefits and Reflection

This AI use case ticks a lot of boxes, for students and teachers alike:

Learners get more practice time and feedback. Because an AI listener feels less intimidating than a teacher or peer, they may also try riskier vocabulary or strategies they would not normally use in class.

However, I wish there were visual cues, even just a cartoon avatar, to reinforce non-verbal communication and make the practice more authentic.

Teachers get better data about how students are speaking. Each session generates a transcript which can be analyzed to spot filler words, over‑used linkers, or weak verb tenses. There’s a lot more that can be done, like profiling individual growth by comparing first and latest attempts and pulling illustrative quotes for in‑class feedback without relying on memory.

However, I wish the actual conversation audio could also be extracted. I know the technology to analyze pronunciation already exists, so the two could be combined to give feedback on both content and pronunciation.
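The transcript analysis described above can be sketched in a few lines of Python. This is only a rough illustration, not what Play.ai provides: it assumes you have already copied the student's turns out of a transcript as plain text, and the word lists (`FILLERS`, `LINKERS`) and function names are hypothetical placeholders to adapt to your own class. It counts filler words and linkers, and uses a simple type-token ratio (unique words divided by total words) as a crude proxy for vocabulary range.

```python
import re
from collections import Counter

# Hypothetical word lists (assumptions, not from any exam rubric).
FILLERS = ("um", "uh", "like", "you know", "i mean")
LINKERS = ("however", "moreover", "because", "so", "and then")


def tokenize(text):
    """Lowercase the text and return its words, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())


def count_phrases(text, phrases):
    """Count whole-word occurrences of each word or phrase in the text."""
    normalized = " ".join(tokenize(text))
    counts = Counter()
    for phrase in phrases:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        counts[phrase] = len(re.findall(pattern, normalized))
    return counts


def type_token_ratio(text):
    """Unique words divided by total words; 0.0 for empty text."""
    words = tokenize(text)
    return len(set(words)) / len(words) if words else 0.0


if __name__ == "__main__":
    # Made-up sample answer, standing in for one student's transcript.
    sample = "Um, I think, um, it is like good because, you know, it is good."
    print(count_phrases(sample, FILLERS).most_common(3))
    print(count_phrases(sample, LINKERS)["because"])
    print(round(type_token_ratio(sample), 2))
```

Running the same functions on a student's first and latest transcripts gives a rough before-and-after comparison. Treat the numbers as conversation starters rather than scores: a plain count cannot tell "like" the filler from "like" the verb, and type-token ratio naturally drops as answers get longer.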

If chatting with the AI can be assigned as homework, valuable live lessons can be repurposed. I’d like to see the bot handle Q&A while classroom time shifts to strategy: turn‑taking, discourse markers, and peer critique—areas where human nuance still rules.

Where this leads

Swap the question bank and the system prompt, and the same setup can power mock job interviews, scholarship panels, or oral defences. As next‑gen speech models become more authentic and gain the ability to understand tone and emotion, the experience can only get better.

That said, I do worry about emotional dependence. At some point we may also decide that full authenticity isn’t needed. VR training and education research points the same way: it shows no benefit from highly realistic visuals, because cognitive realism and abstracted environments help learners focus on essential information, improving comprehension and retention.