Why Does AI Cost So Much? What Does the Money Get You?

In short:

Most people don’t have an accurate grasp of AI progress because free models lag far behind the state of the art.

Paid AIs can outperform free models by a wide margin (e.g., a 43-point IQ gap in one comparison), which creates equity concerns in classrooms.

Advanced systems no longer show many of the "telltale signs" that used to give AI fakes away, so the first wave of AI literacy content may already be out of date.

The cost of AI is dropping fast, so while early adopters race ahead, others may still catch up in months rather than years.

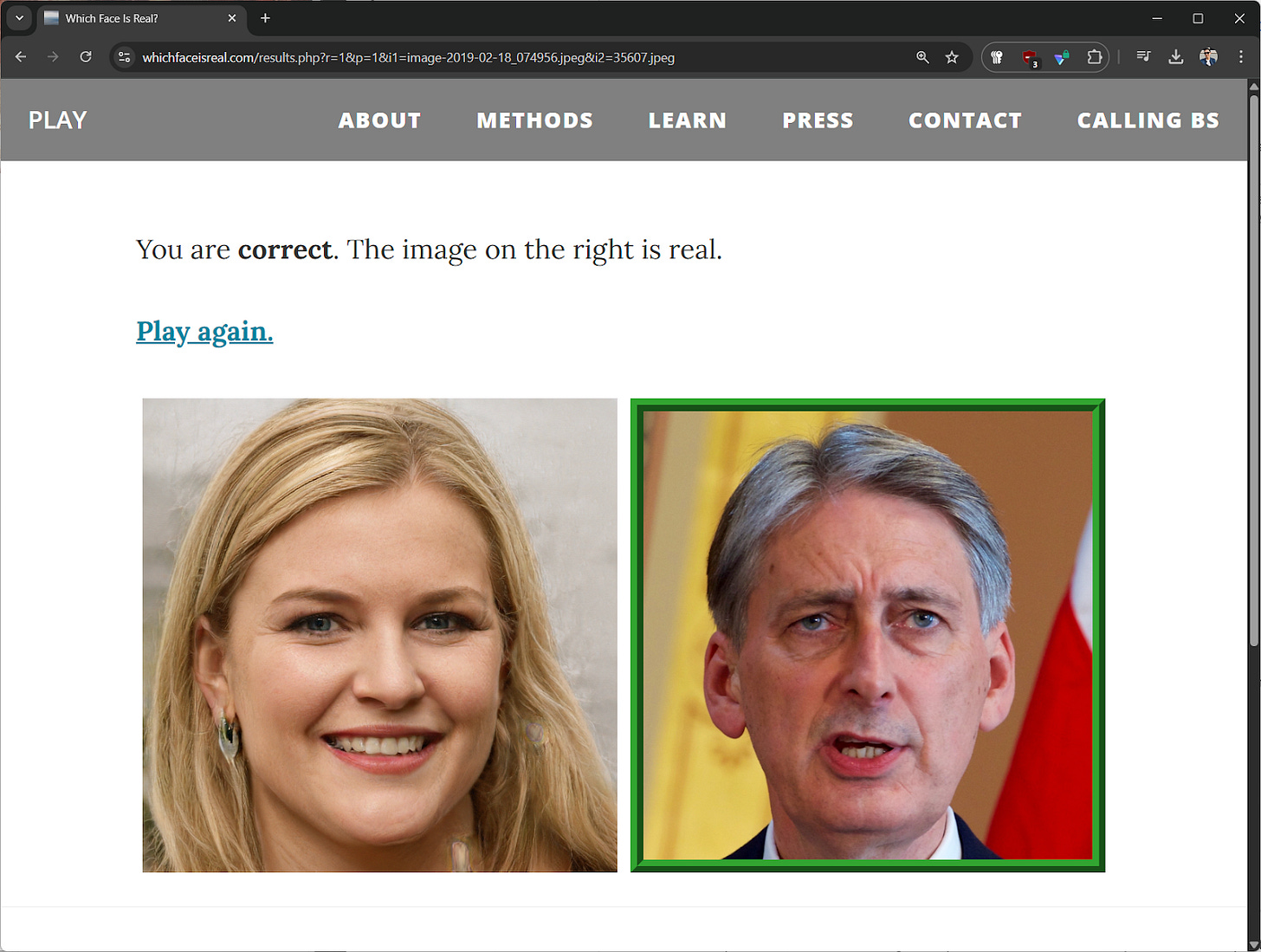

I recently gave a school talk about information literacy in the age of AI, and we played the Which Face is Real quiz from the University of Washington's Calling Bullshit project. The students were about 50% accurate at picking out the AI-generated fakes, roughly what you would expect from guessing when each question offers two faces. The ones who did better than average were eager to point out telltale signs such as asymmetrical earrings, scrambled letters or logos, and other random defects.

But some students got every one wrong and struggled to identify the fakes even after learning about the usual tells.

It gets worse: the images in the quiz were generated with StyleGAN, a seven-year-old model that is ancient by modern AI standards. As of 2025, it is trivial for models to generate pictures indistinguishable from reality, although many platforms block that capability with safety filters. However, intensifying competition and user complaints about censorship have pushed model providers to relax those guardrails, as OpenAI and xAI have done in the past month. Nowadays, it's easy to generate AI images that pass as real, especially if you're just scrolling past them on social media.

The Good Future Foundation has a paper in the works on AI and misinformation, so I'll leave that aspect to my colleagues. Instead, I'd like to highlight that the gap between paid and free AIs is even wider for text generation. This means well-to-do students can get a significantly more advanced tutor, one whose output is also harder for AI detectors to flag. (That said, AI detectors don't work, and many experts recommend banning them.)

Case in point: consider the staggering 43-point IQ gap between GPT-4o (the default model on ChatGPT's free plan) and o1 Pro (which is exclusive to ChatGPT Pro users paying 200 USD/month).

This paywall on intelligence is a problem not just because of inequity. Because free AIs are often a few steps behind cutting-edge models, most people don't have an accurate grasp of just how powerful and disruptive AI models have already become:

A January 2025 study found that people rated AI responses as more empathetic than those of mental health experts, including crisis hotline responders trained to handle psychological crises.

OpenAI’s expensive o1 model got a “perfect score” on a Carnegie Mellon undergraduate math exam. The professor who designed the problems noted that this cost “around 25 cents, for work that most people can't complete in 1 hour.”

An upcoming paper from Microsoft found that AI had changed “the nature of critical thinking” from “information gathering to information verification”, from “problem-solving to AI response integration” and from “task execution to task stewardship”.

What Does This Mean?

It turns out you do get what you pay for when it comes to AI. And we are nowhere near the point of diminishing returns, because there are now two clear paths for AI to become smarter: training larger models, and letting models reason for longer.

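I'm an optimist, and I think AI tutors will solve Bloom's two sigma problem: one-on-one tutoring lifts student performance by about two standard deviations, but it has always been too expensive to offer every student. The cost of AI has been falling 10x every 12 months, so while early adopters can definitely race ahead, most others can still catch up in months rather than years.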
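To make the "months rather than years" arithmetic concrete, here is a minimal Python sketch. It assumes the 10x-every-12-months decline holds as a smooth exponential, which real prices only roughly follow. The helper names `cost_after` and `months_until` are hypothetical, and the 20 USD target is an illustrative price point, not a quoted price.

```python
import math


def cost_after(initial_cost: float, months: float, decline_per_year: float = 10.0) -> float:
    """Cost after `months`, assuming cost falls by `decline_per_year`x every 12 months."""
    return initial_cost * decline_per_year ** (-months / 12)


def months_until(initial_cost: float, target_cost: float, decline_per_year: float = 10.0) -> float:
    """Months for cost to fall from initial_cost to target_cost under the same assumption."""
    if initial_cost <= 0 or target_cost <= 0:
        raise ValueError("costs must be positive")
    return 12 * math.log(initial_cost / target_cost) / math.log(decline_per_year)


# Illustrative: a 200 USD/month capability falling to a hypothetical 20 USD/month price point.
print(round(months_until(200, 20), 1))  # 12.0
print(round(cost_after(200, 6), 2))  # 63.25
```

Under this assumption, each further 10x drop takes another 12 months, so a capability priced at 200 USD/month would reach 2 USD/month in about 24 months.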